@article{ma-papsp,

author = {Sarkar, Aditya and Li, Yi and Cheng, Jiacheng and Mishra, Shlok and Vasconcelos, Nuno},

journal = {ArXiv preprint},

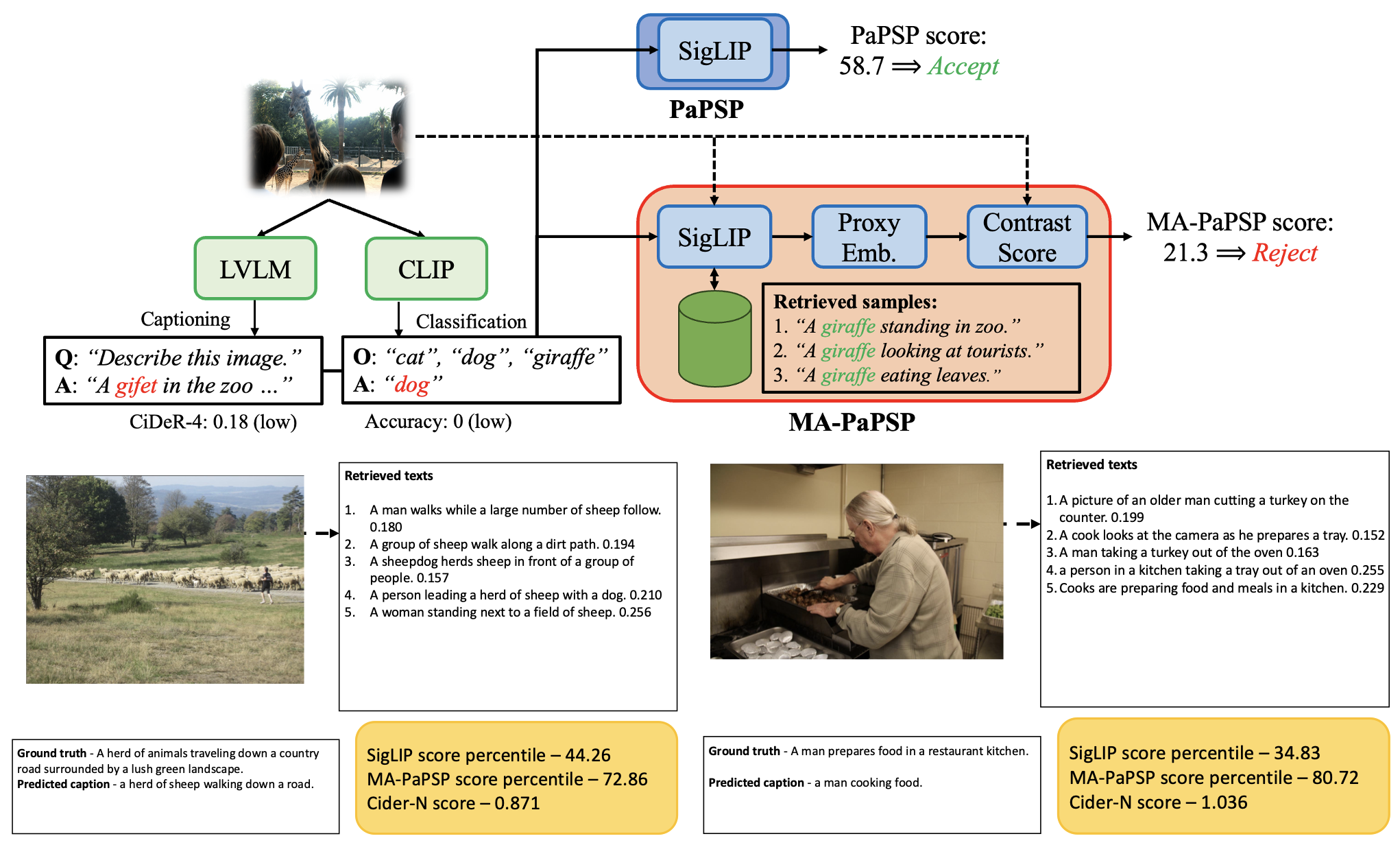

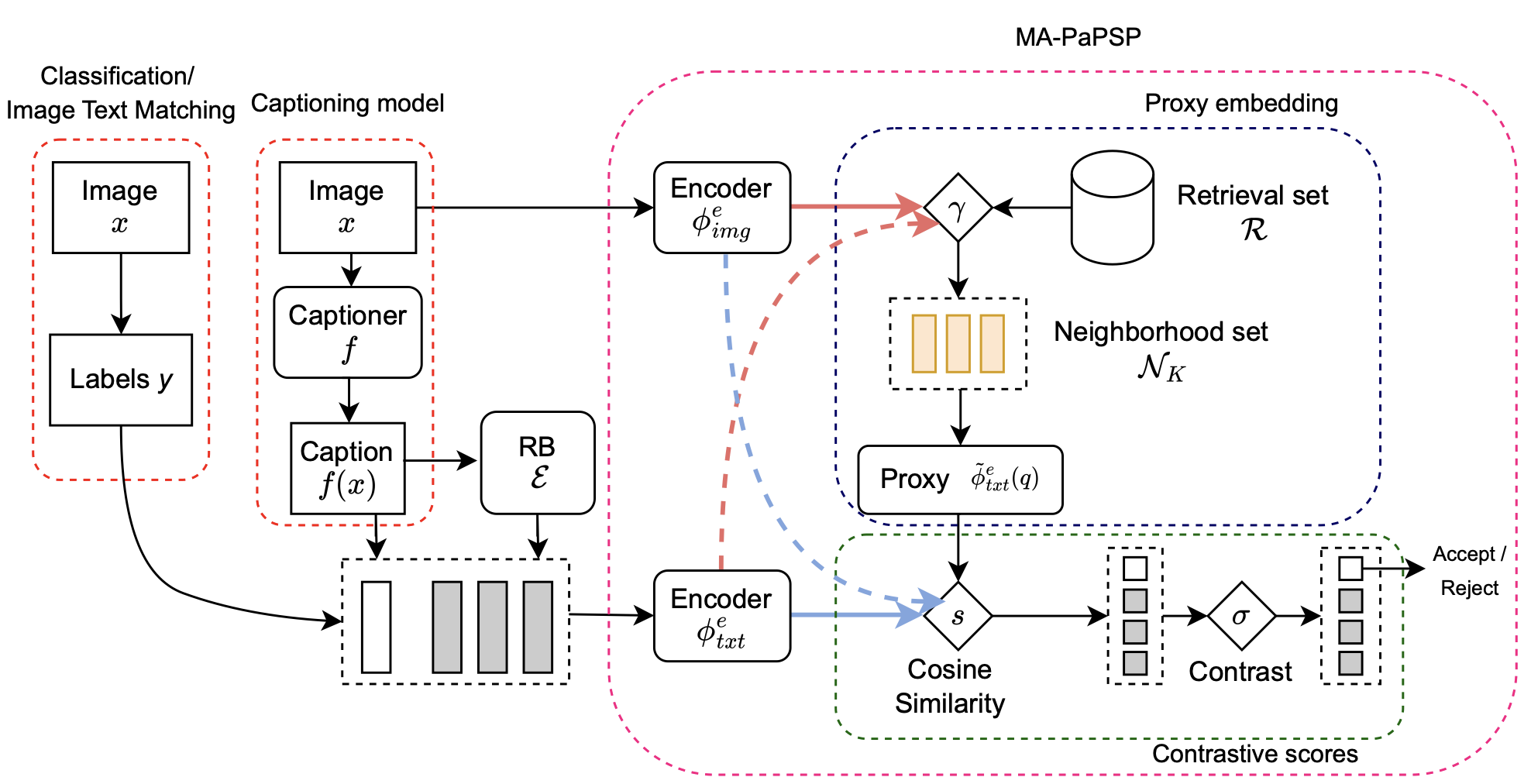

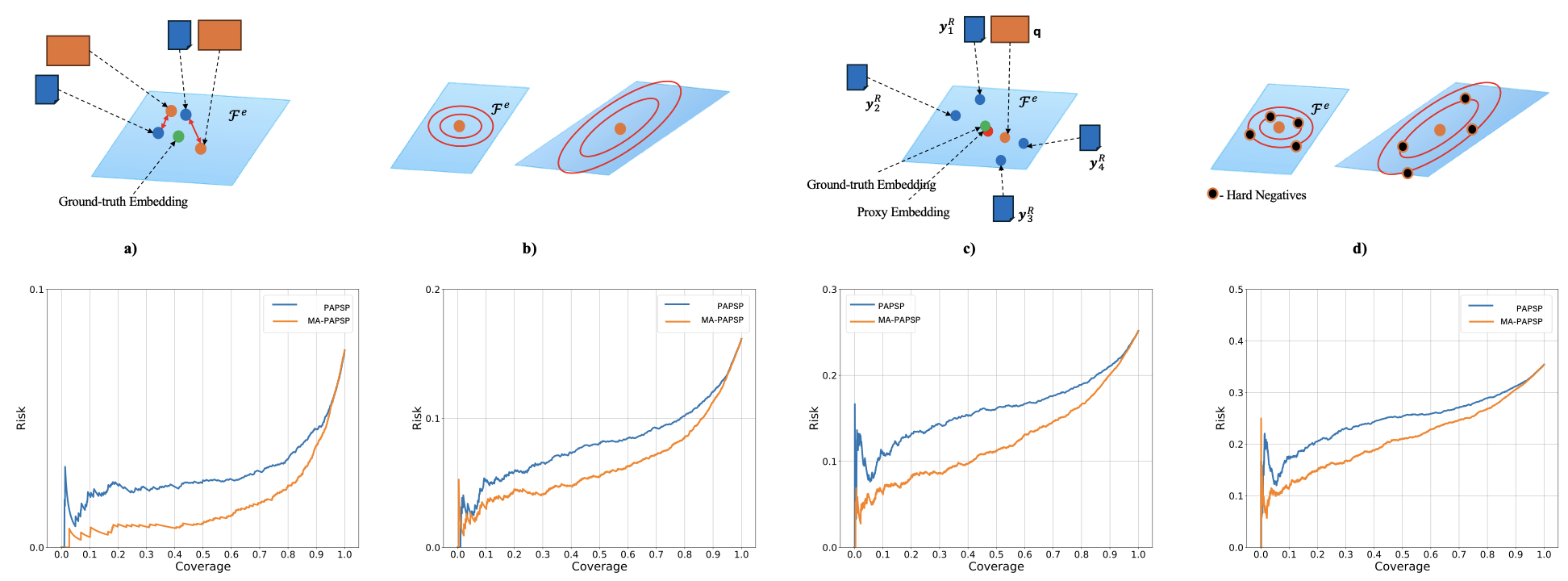

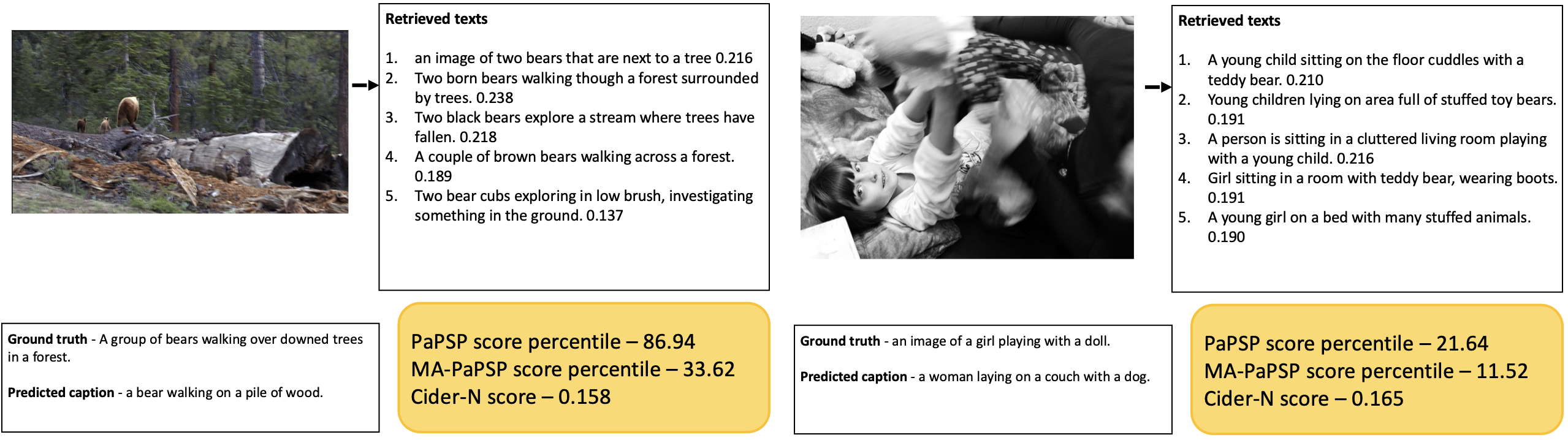

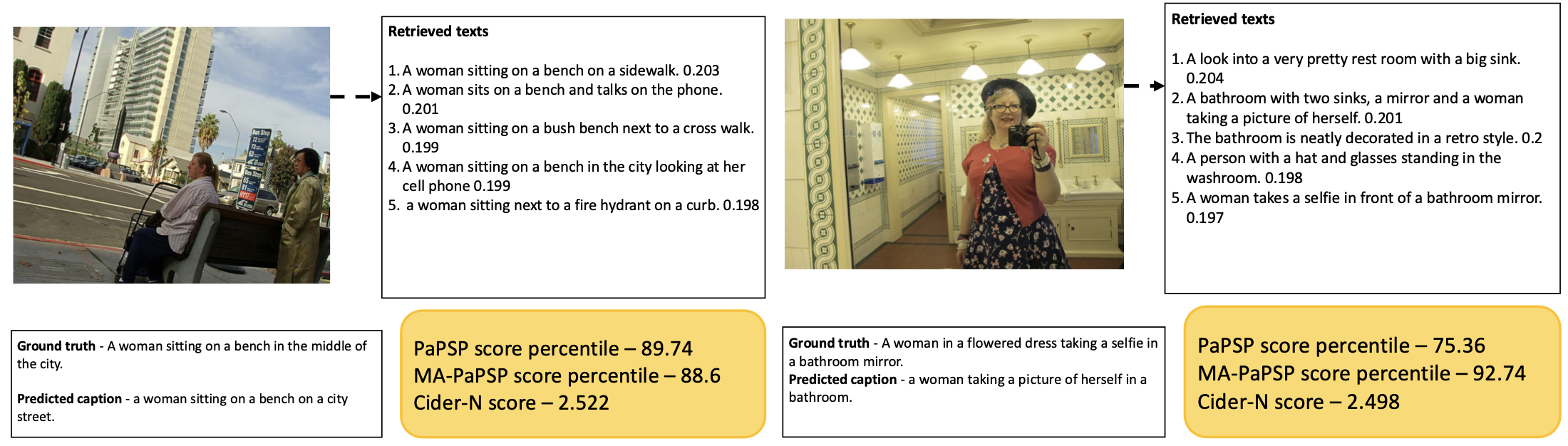

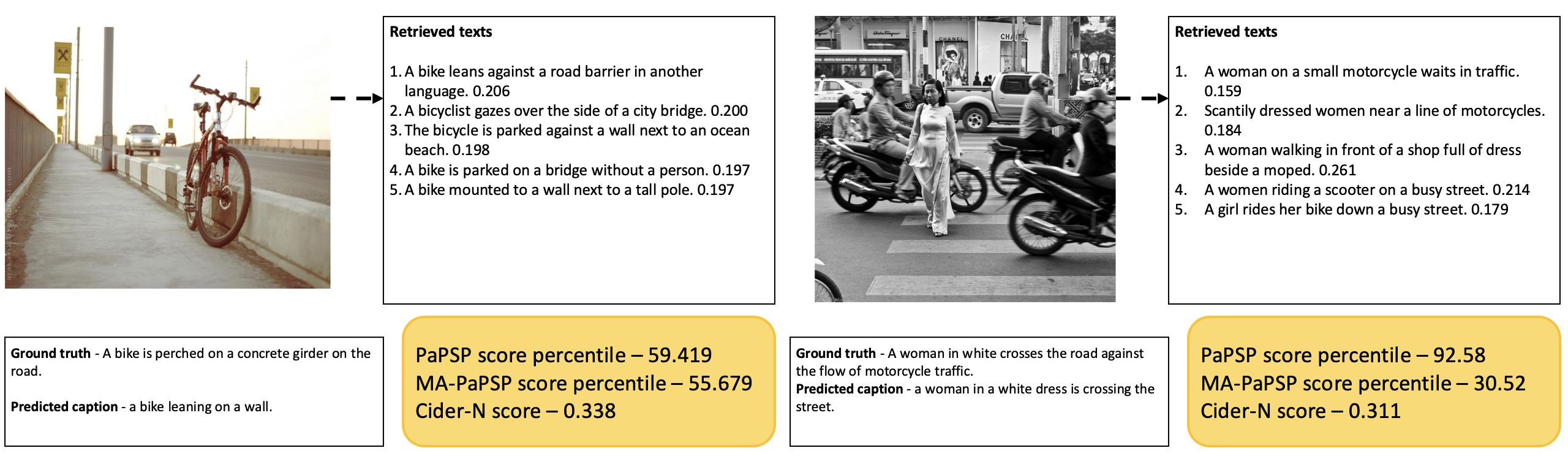

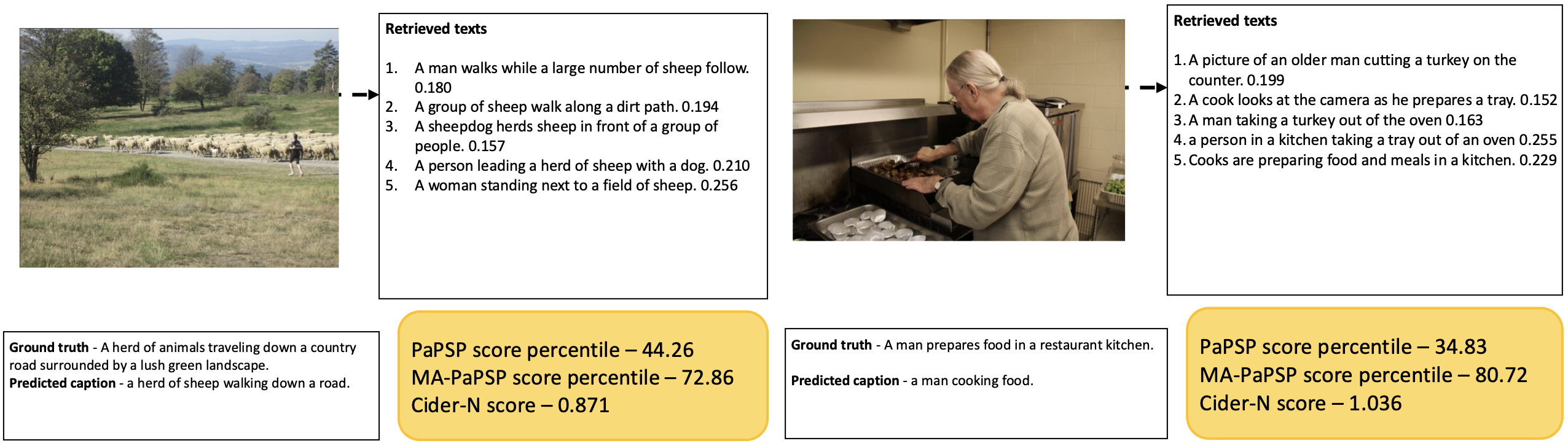

title = {Leveraging Data to Say No: Memory Augmented Plug-and-Play Selective Prediction},

url = {https://arxiv.org/abs/2601.22570},

volume = {abs/2601.22570},

year = {2026}

}